Advisory: Attack of the Mongolian space evaders! (and other Medieval XSS vectors)

26 Aug 2008

Damage: Filter evasion, cross-site scripting

Exploit: Bypass XSS filters, IPS/IDS, AV, or WAFs with specially crafted white_space characters to execute XSS attacks.

Root Cause: Interpreting syntax replacements

Product Version: Opera 9.51 and earlier

Or should we call this "Druidic magical symbols enable filter evasion and cross-site scripting"...

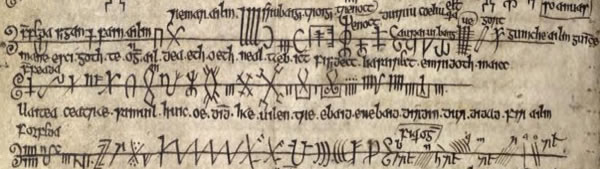

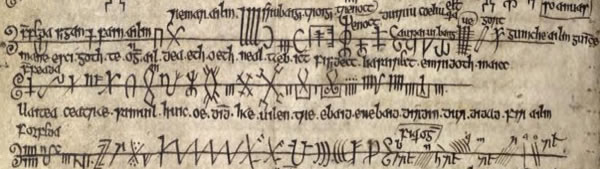

If only I had some Daniel Clowes skills to illustrate this! It's not often we can use Ogham Space Marks and Mongolian Vowel Separators to deliver cross-site scripting attacks.

What am I talking about? Okay, enough fun, read on...

Opera released version 9.52 of their flagship browser about a month ago to address an issue in the way certain Unicode characters were being interpreted as white space. This behavior enabled cross-site scripting (XSS) attacks which might not otherwise be possible. Exploiting this issue could also be useful for evading HTML filters, WAFs, or other detection systems that try to prevent XSS attacks.

The HTML 4.01 specification defines four whitespace characters and explicitly leaves other cases undefined. Note to XSS filter developers: any character can be treated as whitespace by an HTML4-conforming user agent.

The HTML 5 specification defines five types of "space characters", and explicitly nothing else. However, the HTML 5 spec is in flux, which is a much bigger issue... more on that later.

The Unicode spec assigns binary property meta-data to code points, one of which is the 'white_space' property. In Opera's case, we could use almost any character with a Unicode white_space property to represent a normal whitespace character like U+0020.
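To make the property lookup concrete, here is a minimal Python sketch that prints the general category of a few code points named in this advisory. It rests on some assumptions: Python's `unicodedata` module does not expose the binary white_space property directly, so the sketch uses the general category `Zs` (space separator) as a rough stand-in, and the helper names `describe` and `zs_code_points` are made up for illustration. The result for U+180E depends on the Unicode data version bundled with the interpreter, since later Unicode versions reclassified it from `Zs` to `Cf`.

```python
import unicodedata

# Code points named in this advisory, plus the ordinary space for comparison.
CANDIDATES = [0x0020, 0x1680, 0x180E, 0x2002, 0x200A, 0x205F, 0x3000]


def describe(code_point):
    """Return (label, character name, general category) for one code point."""
    ch = chr(code_point)
    return (
        f"U+{code_point:04X}",
        unicodedata.name(ch, "<unnamed>"),
        unicodedata.category(ch),
    )


def zs_code_points(limit=0x10000):
    """List every code point below `limit` whose general category is Zs."""
    return [cp for cp in range(limit) if unicodedata.category(chr(cp)) == "Zs"]


if __name__ == "__main__":
    # The answer for U+180E varies with the Unicode data version in use.
    print("Unicode data version:", unicodedata.unidata_version)
    for cp in CANDIDATES:
        print(*describe(cp))
```

Note that `Zs` and white_space are not the same set: a tab (U+0009), for example, has the white_space property but the general category `Cc`.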

The following characters are all treated as a space, making things like:

`<a href=#[U+180E]onclick=alert()>`

possible. This list includes many of the Unicode characters with the white_space property:

U+2002 to U+200A

U+205F

U+3000

U+180E Mongolian Vowel Separator

U+1680 Ogham Space Mark

Here's a link to the test case: Opera Unicode white_space
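As a rough illustration of why this matters for filters, the sketch below shows a hypothetical, deliberately naive detector that only recognizes an event-handler attribute when it follows ASCII whitespace. The pattern and the function name `naive_filter_flags` are assumptions for this example and do not describe any particular product. It uses U+1680 (Ogham Space Mark) as the separator.

```python
import re

# Hypothetical filter rule: an "on...=" attribute preceded by whitespace.
# re.ASCII restricts \s to [ \t\n\r\f\v], which is the blind spot shown here.
NAIVE_HANDLER = re.compile(r"\son\w+\s*=", re.ASCII | re.IGNORECASE)


def naive_filter_flags(markup):
    """Return True if the naive rule spots an event-handler attribute."""
    return NAIVE_HANDLER.search(markup) is not None


plain = "<a href=# onclick=alert()>"
evasive = "<a href=#\u1680onclick=alert()>"  # Ogham Space Mark as separator

if __name__ == "__main__":
    print("plain flagged:  ", naive_filter_flags(plain))
    print("evasive flagged:", naive_filter_flags(evasive))
```

The filter catches the first string but not the second, while a browser that treats U+1680 as whitespace would parse both the same way.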

Interesting though! You never know what could go wrong. =)

1) white space assigned as its binary property, or 2) Zs assigned as its general category, will have the same effect.

Did you run these tests manually or create a harness?

Interesting point, I should dig deeper into why it happens. =) Gotta check it out tonight. I just started looking into this kind of character evasion.

Well, I tested it manually. I generated the pages with the scripts and appended the results to a list that I came back to later on. That isn't the smartest approach; I should use Selenium to automate starting all the other browsers. (It was a private repository for manual testing.)

How would you automate browsers? I'd like to hear some other alternatives; I've been looking into them a bit.

So I trigger the test by opening the page with the scripts as a new page. Due to poor specification, I have to split the scripts into pages of 8000~10000 tags (so, 7 pages). It is slow and a waste of time to click through many browsers.
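For readers wondering what such generated pages might look like, here is one possible sketch. It is not the commenter's actual script. The `<img src=x…onerror=…>` probe, the `hit()` callback, and the function names are all assumptions made for illustration. The idea is that if the browser treats the candidate character as whitespace, `onerror` becomes a separate attribute and fires when the image fails to load.

```python
# Shared page header: declares UTF-8 and a callback that records which
# code points were treated as attribute separators.
HEAD = (
    "<!DOCTYPE html>\n"
    '<meta charset="utf-8">\n'
    "<script>var hits = []; function hit(cp) { hits.push(cp); }</script>\n"
)


def build_tag(code_point):
    """One probe: the raw character sits between the src value and onerror."""
    return f"<img src=x{chr(code_point)}onerror=hit({code_point})>"


def build_pages(code_points, per_page=10000):
    """Split the probes into pages of at most `per_page` tags each."""
    # Surrogates cannot be encoded as UTF-8, so they are skipped.
    usable = [cp for cp in code_points if not 0xD800 <= cp <= 0xDFFF]
    pages = []
    for start in range(0, len(usable), per_page):
        chunk = usable[start:start + per_page]
        pages.append(HEAD + "\n".join(build_tag(cp) for cp in chunk))
    return pages
```

Calling `build_pages(range(0x10000))` yields 7 pages at 10000 tags per page, which lines up with the page count mentioned above. Each page would need to be written out as UTF-8 before loading it in a browser, and a few code points such as `>` simply end the tag early rather than producing a useful probe.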

An automated way:

1. Follow your setup.

2. Set up a Selenium script to load the pages one by one, with different browsers.

3. WaitForPageToLoad(LONG_PERIOD_OF_TIME).

There you go: run the Selenium script and it should do the job on its own. The downside is that you still need to write a short script.

I have been trying to figure out a way to do automated AJAX crawling... and that means I can't rely on scripting for each particular website. I am starting to believe it isn't going to happen that way... and you need to do a simple HTTP request crawler and then do a more comprehensive scan with site-specific scripts.

I am still thinking...

You should parse and re-generate HTML, outputting only known safe elements, attributes and their values.

Otherwise *any* difference in parsing of arbitrarily broken HTML may be used to evade the filter.
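A minimal sketch of the parse-and-regenerate approach, using Python's standard `html.parser`, might look like the following. The allowlists, the URL-prefix check, and the names `AllowlistSanitizer` and `sanitize` are illustrative assumptions, and a production sanitizer would need far more care (tag balancing, more URL schemes, CSS, and so on). The point is only that the output is rebuilt from parsed pieces, so anything not explicitly allowed never reaches it.

```python
from html import escape
from html.parser import HTMLParser

# Illustrative allowlists; a real deployment would choose its own.
ALLOWED_TAGS = {"a", "b", "i", "em", "strong", "p"}
ALLOWED_ATTRS = {"a": {"href"}}
SAFE_URL_PREFIXES = ("http://", "https://", "#")


class AllowlistSanitizer(HTMLParser):
    """Re-emit only allowlisted tags and attributes, escaping all text."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.out = []

    def handle_starttag(self, tag, attrs):
        if tag not in ALLOWED_TAGS:
            return  # unknown element: drop the tag itself
        kept = []
        for name, value in attrs:
            if value is None or name not in ALLOWED_ATTRS.get(tag, set()):
                continue
            if name == "href" and not value.strip().lower().startswith(SAFE_URL_PREFIXES):
                continue
            # Always re-quote and re-escape the value on output.
            kept.append(f' {name}="{escape(value, quote=True)}"')
        self.out.append(f"<{tag}{''.join(kept)}>")

    def handle_endtag(self, tag):
        if tag in ALLOWED_TAGS:
            self.out.append(f"</{tag}>")

    def handle_data(self, data):
        self.out.append(escape(data, quote=False))


def sanitize(html_text):
    parser = AllowlistSanitizer()
    parser.feed(html_text)
    parser.close()  # flush any buffered trailing text
    return "".join(parser.out)
```

Because attribute values are always re-quoted on output, it no longer matters which characters the downstream browser might treat as a separator inside an unquoted value.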

OR just don't allow unescaped HTML. Translate the less-than character to its equivalent entity. That's it!
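In Python, for instance, the escaping approach could be sketched with the standard library's `html.escape`. Note that it escapes `&`, `>`, and quotes as well as `<`, which is slightly more than the comment above asks for. The function name `neutralize` is just a placeholder for this example.

```python
import html


def neutralize(untrusted):
    """Escape markup-significant characters so input renders as plain text."""
    return html.escape(untrusted, quote=True)


payload = "<a href=#\u1680onclick=alert()>"

if __name__ == "__main__":
    print(neutralize(payload))
```

With the angle brackets turned into entities, the exotic separator is left untouched but has no tag to act within.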