Building an SDL Program - Part 1 - Where to start?

15 Mar 2017

I'm writing a series on building an SDL program: the plans, the unexpected, the gotchas, and the good stuff. Security Development Lifecycle (SDL) is a phrase that comes from Microsoft, who pioneered the structure and processes that I am most familiar with. I spent a bunch of years as a security vendor for Microsoft while they were building up and rolling out the SDL, and I became so intimate with the process, and with helping product teams through it, that my company was asked to become one of a few select members of the SDL Pro Network (thanks Katie!).

Microsoft has recently gone into detail about their own SDL story, how it came about, and where it took them. It's a great read to get some valuable context, history, and perspective. If you're at ground zero and want to build an SDL program at your company, expect a long, bumpy road ahead, but rest assured that a good plan, with willingness to be flexible, will get you there.

Setting the Stage

For this article I'm going to assume you have a company of a few thousand people, and maybe 400 in the application engineering discipline. That size makes a good middle ground for this series, as aspects can be scaled up or down pretty easily.

The company has a development culture, but not an application security one. Your software products have been in production for many years, and things are at a point where major rework is underway. The development teams are preparing for a new product launch based on a brand new architecture. It's all still in the early experimental phase, before any design decisions, and to top it off product teams are adopting DevOps-style Agile processes with continuous integration and continuous delivery (CI/CD) goals.

Executive Support

SDL doesn't happen without full executive support. The integration of security practices like threat modeling and pen testing does add time to release cycles - it's extra work. It's also more painful early in the transition, before the practices become part of the culture. Once they do, they are constantly in mind but don't feel like they're in the way. The leadership team should understand that this program isn't just about implementing technical controls. It's more about implementing a practice, new habits, which requires an intrinsic culture change. The good news is that while people who haven't experienced building security into the development process typically dread security reviews and meetings, in my experience going through the process with them causes the reverse to happen - development staff actually start to look forward to security meetings.

First Steps - Interviews and Maturity Assessment

You may have a sense of where your organization is at with regard to application security in the development processes, and you're almost certainly right. Nevertheless it's still a good idea to do a proper assessment to document the organization's maturity level. That assessment will serve as the starting point and reference for further discussions. The other value in an assessment is using it to measure the organization against other orgs in your industry.

Since you're going to be meeting and interviewing people during this phase, some other goals to keep in mind include:

1) Start prioritizing teams

Use the interview process as a way to identify high-priority product teams and understand their release schedules. When I think high-priority I think of customer-facing and Internet-facing apps. The CISO or security lead you're working with may already have an idea of what products these are, but that could change as you learn things along the way.

2) Start finding friends

Use the interview process to identify folks who are the most open and interested in security and SDL - you will want to partner with them for the later pilot phase.

The Building Security in Maturity Model (BSIMM) is a valuable tool for measuring maturity. It's a yearly study of dozens of companies from across various industry verticals. The BSIMM was born out of a desire to gather real data about SDL implementations. As such, the people behind the study didn't approach companies with a pre-canned list of measurements. Rather, they went to the companies to learn what those measurements should be.

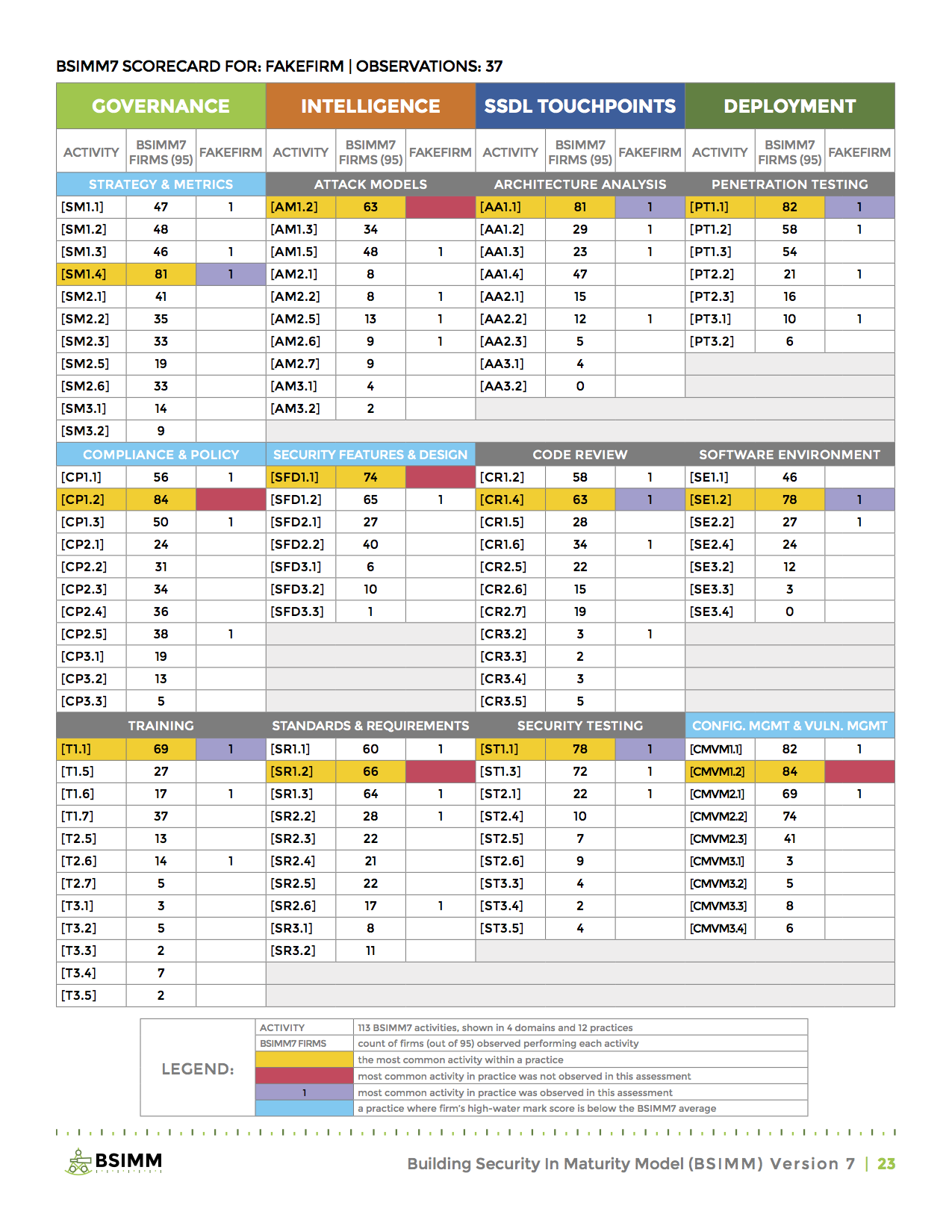

The image below shows a sample BSIMM7 scorecard ripped straight out of the BSIMM7 report. To actually perform an assessment means you will need to do some work:

- Identify key stakeholders in the development organization. These are product owners, managers, directors, and developer leads.

- Set up a one-hour meeting with each stakeholder.

- Using the BSIMM activity list, identify and mark which activities are implemented in the org.

- During this meeting, collect some other data that describes development workflows, tech stacks, and methodologies that may help to further inform the assessment.
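The bookkeeping behind these steps can be sketched in a few lines of Python. This is only an illustration of one way to merge interview notes: the stakeholder names, the choice of activity IDs, and the `merge_interviews` helper are all made up for the example, not part of the BSIMM or any tool.

```python
from collections import defaultdict

# Hypothetical interview notes: stakeholder -> BSIMM activity IDs they
# described as being in place. Names and IDs are illustrative only.
interviews = {
    "product_owner_a": {"PT1.1", "CR1.2", "T1.1"},
    "dev_lead_b": {"PT1.1", "ST1.1"},
    "director_c": {"T1.1", "SM1.1", "PT1.1"},
}


def merge_interviews(interviews):
    """Map each observed activity ID to the sorted list of stakeholders who reported it."""
    reported_by = defaultdict(list)
    for stakeholder, activities in interviews.items():
        for activity in activities:
            reported_by[activity].append(stakeholder)
    # Sort by activity ID so the merged view is stable between runs.
    return {activity: sorted(names) for activity, names in sorted(reported_by.items())}


merged = merge_interviews(interviews)
for activity, names in merged.items():
    print(f"{activity}: reported by {len(names)} of {len(interviews)} stakeholders")
```

Keeping track of who reported each activity makes it easier to follow up when only one team describes a practice that you expected to see everywhere.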

Alternatively you could prepare a questionnaire, but I find it more helpful to meet in person and talk through things. Another option, since the BSIMM activity list is pretty big, is to use the Microsoft SDL Optimization Model as the measuring stick. I was able to extract 52 practices, or activities, for my own assessment needs. Almost all of these map to something in the BSIMM, and whereas the BSIMM scorecard below might be a little too much for some people to look at, a similar Microsoft SDL scorecard would be smaller and easier to understand.

Let's explain this scorecard a bit. The BSIMM framework organizes 113 discrete activities into 12 practice areas, which are further organized into 4 domains:

- Governance
  - Strategy and Metrics (SM)
  - Compliance and Policy (CP)
  - Training (T)

- Intelligence
  - Attack Models (AM)
  - Security Features and Design (SFD)
  - Standards and Requirements (SR)

- SSDL Touchpoints
  - Architecture Analysis (AA)
  - Code Review (CR)
  - Security Testing (ST)

- Deployment
  - Penetration Testing (PT)
  - Software Environment (SE)
  - Configuration Management and Vulnerability Management (CMVM)

The four domains are represented as pillars in the scorecard, with three practice areas in each. If you look at the DEPLOYMENT pillar, and the first practice area called PENETRATION TESTING, you will see 7 discrete activities, each further organized into 3 maturity levels. The idea here is that you can measure your own penetration testing maturity against these 7 activities. The most basic maturity level would be represented by PT1.1, PT1.2, and PT1.3. Increasing maturity levels are represented by PT2.x and PT3.x. As you can see, a large majority of firms studied (82) had a basic pen testing activity implemented. That count goes down as the maturity level goes up. This is to be expected: most firms will be less mature, and only a few will have reached high maturity.
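The ID scheme described above (practice abbreviation, then maturity level, then a sequence number) is easy to work with programmatically. The following is a rough sketch, assuming IDs always look like `PT1.1` or `CMVM2.3`. The `parse_activity` and `observed_per_level` helpers and the sample `observed` list are hypothetical, written only for this example.

```python
import re
from collections import Counter

# Practice abbreviation, one-digit maturity level, then a sequence number.
ACTIVITY_ID = re.compile(r"^([A-Z]+)(\d)\.(\d+)$")


def parse_activity(activity_id):
    """Split an ID such as 'PT1.1' into (practice, level, index)."""
    match = ACTIVITY_ID.match(activity_id)
    if match is None:
        raise ValueError(f"not an activity ID: {activity_id!r}")
    practice, level, index = match.groups()
    return practice, int(level), int(index)


def observed_per_level(observed, practice):
    """Count how many observed activities fall at each maturity level of one practice."""
    counts = Counter()
    for activity_id in observed:
        found_practice, level, _ = parse_activity(activity_id)
        if found_practice == practice:
            counts[level] += 1
    return dict(sorted(counts.items()))


# Illustrative assessment result, not real data.
observed = ["PT1.1", "PT1.2", "PT2.2", "CMVM1.1", "SE1.2"]
print(observed_per_level(observed, "PT"))  # {1: 2, 2: 1}
```

A per-level count like this is a quick way to see whether a practice area is still mostly at the basic level before you compare it against the scorecard.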

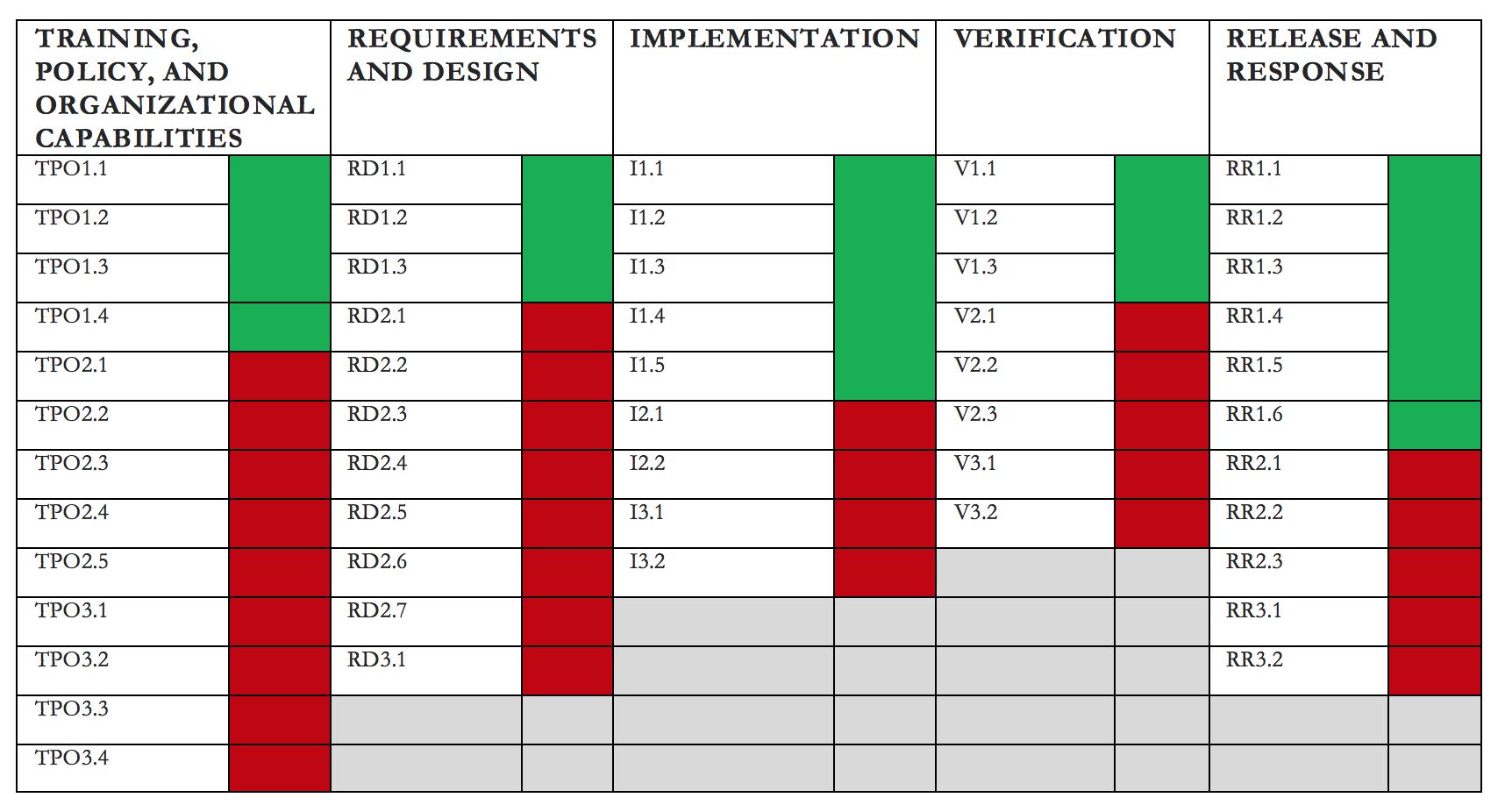

Now, if you decided to go with the Microsoft SDL Optimization Model as the reference, you would wind up with a scorecard like the following:

This takes a bit of explaining as well. You probably won't find this scorecard anywhere else, because I made it for my own purposes. It represents 52 specific activities spread out across 5 phases of the SDLC. Each phase is a pillar, and within each pillar, activities are ordered from a basic, level 1 maturity, to a more advanced, level 3 maturity. In this example, the Verification pillar shows that the organization meets all three Microsoft SDL activities for level 1 maturity - V1.1, V1.2, and V1.3. But they do not meet the more advanced level 2 and 3 activities.
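To make the Verification example concrete, here is a small sketch of how a pillar's maturity could be derived from the list of implemented activities. It assumes that a level only counts when every activity at that level and all lower levels is met. That rule is my simplification for the example, not an official scoring method, and the level 2 and 3 activity IDs in the catalog are placeholders.

```python
# Hypothetical catalog for one pillar: level -> activity IDs at that level.
# The level 1 IDs follow the example in the text; level 2 and 3 are placeholders.
VERIFICATION = {
    1: ["V1.1", "V1.2", "V1.3"],
    2: ["V2.1", "V2.2"],
    3: ["V3.1"],
}


def highest_level_met(catalog, implemented):
    """Return the highest level N where all activities at levels 1..N are implemented, or 0."""
    met = 0
    for level in sorted(catalog):
        if all(activity in implemented for activity in catalog[level]):
            met = level
        else:
            break  # a gap at this level stops the climb (assumed cumulative rule)
    return met


# All of level 1 is in place, plus one stray level 2 activity.
implemented = {"V1.1", "V1.2", "V1.3", "V2.1"}
print(highest_level_met(VERIFICATION, implemented))  # 1
```

Partially met levels, like the single level 2 activity here, do not raise the score under this rule, but they are worth noting as a head start on the next level.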

Lessons Learned

One of the reasons I wanted to write about this was to talk about some of the lessons I learned personally or have seen others face. In the opening stage here, when the landscape is still pretty greenfield, it doesn't seem like there's a lot that could go wrong. This stage is more about setting expectations, and paying close attention to the people involved so you can manage those expectations. Some things to keep in mind at this stage:

Understand where the organization fits into the BSIMM

Data from the BSIMM is organized into industry verticals. If you are showing the organization how they compare to one of those verticals, be ready to explain the data in a little more detail. For example, if the organization is a health care insurer, you may show them BSIMM's radar chart of the health care industry. Well, suddenly 'health care industry' becomes a very vague term, and you may be faced with questions like - is that data from insurers or providers? Is that data from our competitors? Digging deeper shows that health care data in BSIMM encompasses more than just insurers, and includes medical tech companies as well. It's better to know the details of what you're comparing against.

Describe the vision

Executives may be patient, but they will feel much better having a rough ETA of when they will get their SDL program. It's too early for promises, but you could set some rough target dates for getting a couple of pilot projects through a minimal SDL process. Beyond that you could set some further goals for rolling a basic SDL out to a high-priority subset of the organization. If that included, say, 10 small product teams of 10 people each, you might suggest 6 to 12 months before they are incorporating SDL practices. Going from ground zero to a functioning SDL will not happen fast. It's usually a cultural change, which means a year minimum to get practices introduced and working in a rudimentary way.

Next Steps

Stakeholders will likely get the value of embedding security practices into the development process, and they might already start asking what the SDL rollout looks like, and how they can prepare their teams. Be ready to talk about the plan at a high level and present a one-page overview of the rollout. Assure them that this will work in a way that best fits the organization's needs and workflows, and that activities will be very opportunistic and not forced, at first = )

Having a proper assessment document provides the launching point for moving forward. In the next article I'll take a look at building a roadmap that will get you to a basic SDL program implementation. The scorecard will remain valuable, as management can revisit it over time to measure your firm's maturity level and watch SDL progress.